Introduction

Modern AI inference is moving into production at scale. As teams deploy powerful models on platforms like Baseten, observability and monitoring become essential for tracking performance, cost, and reliability.

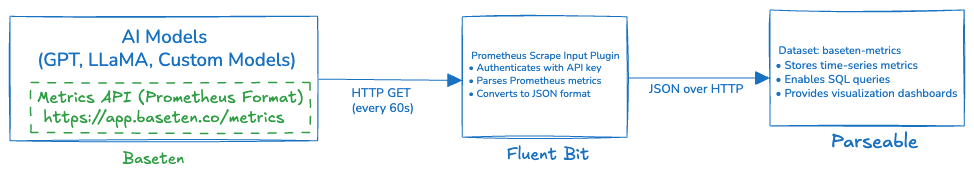

In this post, we'll show you how to set up end‑to‑end metrics collection and monitoring for Baseten using Fluent Bit to scrape metrics from Baseten's API in Prometheus format, and Parseable to store, query, and visualize the data. By the end, you'll have a working monitoring stack for your AI model inference workloads.

Why Monitor Baseten Deployments?

When running AI models in production on Baseten, you need visibility into:

- Performance metrics: Track inference latency, throughput, and response times

- Resource utilization: Monitor GPU/CPU usage, memory consumption, and queue depths

- Cost optimization: Understand usage patterns to optimize your deployment configuration

- Reliability: Detect errors, timeouts, and anomalies before they impact users

Baseten provides a Prometheus-compatible metrics endpoint, making it easy to integrate with modern observability tools. In this guide, we'll build a lightweight monitoring pipeline using Fluent Bit and Parseable.

Architecture Overview

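Our monitoring stack consists of three components working together:

- Baseten metrics endpoint: Exposes deployment metrics in Prometheus format at https://app.baseten.co/metrics

- Fluent Bit: Scrapes the endpoint on a fixed interval and forwards the samples to Parseable over HTTP

- Parseable: Ingests the samples into a stream, stores them, and makes them queryable with SQL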

Key benefits of this architecture:

- Lightweight: Fluent Bit has minimal resource footprint

- Flexible: Easy to add additional data sources or outputs

- Cost-effective: Parseable stores data efficiently in object storage

- Scalable: Handles high-volume metrics without performance degradation

Prerequisites

Before we begin, ensure you have:

- Baseten Account: Active deployment with models running

- Baseten API Key: Available from your Baseten dashboard

- Parseable Instance: Running locally or in the cloud (see the Parseable installation guide); the examples below assume it listens on localhost:8000

- Fluent Bit: Version 2.0 or higher
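Before moving on, a small pre-flight check can confirm that the tools are installed and that Fluent Bit meets the version floor. This is a hypothetical sketch: `require_cmds` and `version_ge` are helper names introduced here, and the usage comment assumes the first line of `fluent-bit --version` contains the version number (for example `Fluent Bit v2.2.0`).

```bash
# Prints each missing command to stderr; fails if any is absent.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Succeeds if dotted numeric version $1 >= $2 (e.g. 2.1.8 >= 2.0).
# Missing components count as 0, so "3" compares like "3.0".
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    na = split(a, x, "[.]"); nb = split(b, y, "[.]")
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      xi = (i <= na) ? x[i] + 0 : 0
      yi = (i <= nb) ? y[i] + 0 : 0
      if (xi > yi) exit 0
      if (xi < yi) exit 1
    }
    exit 0
  }'
}

# Usage (assumes the version format described above):
#   require_cmds fluent-bit curl || exit 1
#   fb_version=$(fluent-bit --version | head -n 1 | grep -oE '[0-9]+(\.[0-9]+)+')
#   version_ge "$fb_version" 2.0 || echo "Fluent Bit 2.0 or higher is required" >&2
```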

Step 1: Configure Fluent Bit

Create a configuration file named fluent-bit-baseten.conf:

```
[SERVICE]
    Flush        5
    Daemon       Off
    Log_Level    info

[INPUT]
    Name             prometheus_scrape
    Host             app.baseten.co
    Port             443
    Scrape_Interval  60
    Metrics_Path     /metrics
    HTTP_User        ${BASETEN_API_KEY}
    HTTP_Passwd      ""
    tls              On
    tls.verify       On

[OUTPUT]
    Name         http
    Match        *
    Host         localhost
    Port         8000
    URI          /api/v1/ingest
    Format       json
    Header       X-P-Stream baseten-metrics
    http_User    admin
    http_Passwd  admin
    tls          Off
```

Configuration breakdown:

- Scrape_Interval: 60 seconds means one request per minute, which stays well within Baseten's rate limit of 6 requests per minute and leaves headroom for manual checks

- Metrics_Path: Baseten's Prometheus endpoint

- HTTP_User: Your Baseten API key for authentication

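- X-P-Stream: The Parseable stream name where metrics will be stored

- http_User / http_Passwd: Your Parseable credentials (admin/admin is the default; change it for anything beyond local testing)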
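Because Scrape_Interval is what keeps the scraper under Baseten's limit, it helps to check the arithmetic before changing it: requests per minute equals the number of scrapers sharing the limit times 60, divided by the interval in seconds. The helper below is a hypothetical sketch that uses the 6 requests/minute figure quoted above as its default; whether the limit applies per API key or per account is an assumption to confirm with Baseten.

```bash
# scrape_budget_ok INTERVAL_SECONDS [SCRAPERS] [LIMIT_PER_MINUTE]
# Checks scrapers * (60 / interval) <= limit using integer math only:
# scrapers * 60 <= limit * interval.
scrape_budget_ok() {
  local interval="$1" scrapers="${2:-1}" limit="${3:-6}"
  if [ $((scrapers * 60)) -le $((limit * interval)) ]; then
    echo "ok: $scrapers scraper(s) every ${interval}s stays within $limit requests/minute"
    return 0
  fi
  echo "over budget: $scrapers scraper(s) every ${interval}s exceed $limit requests/minute" >&2
  return 1
}

# Usage:
#   scrape_budget_ok 60      # the configuration above
#   scrape_budget_ok 10 2    # two scrapers every 10s would exceed the limit
```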

Step 2: Set Up Environment Variables

Export your Baseten API key as an environment variable:

```bash
export BASETEN_API_KEY="your_baseten_api_key_here"
```

Security tip: Never hardcode API keys in configuration files. Use environment variables or a secrets management system.
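Typing the key into an `export` line leaves it in your shell history. One alternative is to keep it in a file readable only by you and load it at startup. The sketch below is hypothetical: `load_baseten_key` and the key file location are illustrative, not part of Baseten or Fluent Bit.

```bash
# Reads the API key from the first line of a file and exports BASETEN_API_KEY.
# Carriage returns (CRLF files) and surrounding whitespace are stripped.
load_baseten_key() {
  local file="$1" key
  if [ ! -r "$file" ]; then
    echo "cannot read key file: $file" >&2
    return 1
  fi
  key=$(head -n 1 "$file" | tr -d '\r' | sed 's/^[[:space:]]*//; s/[[:space:]]*$//')
  if [ -z "$key" ]; then
    echo "key file is empty: $file" >&2
    return 1
  fi
  BASETEN_API_KEY="$key"
  export BASETEN_API_KEY
}

# Usage (hypothetical path):
#   chmod 600 ~/.config/baseten/api_key
#   load_baseten_key ~/.config/baseten/api_key || exit 1
```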

Step 3: Start the Monitoring Pipeline

Create a startup script start-baseten-monitoring.sh:

```bash
#!/bin/bash

# Check if Parseable is running
if ! curl -s http://localhost:8000/api/v1/about > /dev/null; then
    echo "Error: Parseable is not running on localhost:8000"
    echo "Please start Parseable first"
    exit 1
fi

# Check if API key is set
if [ -z "$BASETEN_API_KEY" ]; then
    echo "Error: BASETEN_API_KEY environment variable is not set"
    exit 1
fi

echo "Starting Baseten metrics collection..."
fluent-bit -c fluent-bit-baseten.conf
```

Make it executable and run:

```bash
chmod +x start-baseten-monitoring.sh
./start-baseten-monitoring.sh
```

You should see output indicating Fluent Bit is scraping metrics and sending them to Parseable.
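The startup script gives up as soon as Parseable fails to answer, which can be too strict when both services start together (for example in containers or under a process supervisor). A small retry wrapper is one way to wait for Parseable first. `retry` is a hypothetical helper, and the attempt count and delay in the usage comment are arbitrary; note that `curl -s` without `-f` succeeds on any HTTP response, so this only tests reachability.

```bash
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or ATTEMPTS runs are used up,
# sleeping DELAY_SECONDS between failed attempts.
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Usage: wait up to roughly 30 seconds for Parseable before starting Fluent Bit
#   retry 10 3 curl -s -o /dev/null http://localhost:8000/api/v1/about || exit 1
```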

Step 4: Verify Data in Parseable

- Open your browser and navigate to http://localhost:8000

- Log in with your Parseable credentials (default: admin/admin)

- Look for the baseten-metrics stream in the streams list

- Click on the stream to view incoming metrics

You should see metrics flowing in with fields like:

- model_inference_latency_ms: Time taken for model inference

- request_count: Number of requests processed

- error_rate: Percentage of failed requests

- gpu_utilization_percent: GPU usage metrics

- queue_depth: Number of requests waiting in queue
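Besides the web console, you can check from a terminal that rows are arriving. This sketch assumes Parseable's SQL query API, a POST to /api/v1/query with a JSON body carrying query, startTime, and endTime; confirm the field names against the API reference for your Parseable version. `build_query_payload` is a hypothetical helper that escapes the SQL for JSON, which matters here because the hyphenated stream name has to be double-quoted inside the query.

```bash
# build_query_payload SQL START_TIME END_TIME
# Emits a JSON body for a Parseable query request. Newlines, carriage
# returns, and tabs in the SQL become spaces; backslashes and double
# quotes are escaped for JSON.
build_query_payload() {
  local sql
  sql=$(printf '%s' "$1" | tr '\n\r\t' '   ' | sed 's/\\/\\\\/g; s/"/\\"/g')
  printf '{"query":"%s","startTime":"%s","endTime":"%s"}\n' "$sql" "$2" "$3"
}

# Usage (assumes default admin/admin credentials and ISO 8601 timestamps):
#   curl -s -u admin:admin -H 'Content-Type: application/json' \
#     -d "$(build_query_payload 'SELECT COUNT(*) AS n FROM "baseten-metrics"' \
#           2024-01-01T00:00:00Z 2024-01-01T01:00:00Z)" \
#     http://localhost:8000/api/v1/query
```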

Understanding Baseten Metrics

Baseten exposes a comprehensive set of metrics for monitoring your AI deployments:

Performance Metrics

- Inference Latency: Time from request to response

- Throughput: Requests processed per second

- Cold Start Time: Time to initialize a new model instance

Resource Metrics

- GPU Utilization: Percentage of GPU compute being used

- Memory Usage: RAM and VRAM consumption

- CPU Usage: Host CPU utilization

Reliability Metrics

- Error Rate: Failed requests as a percentage of total

- Timeout Rate: Requests that exceeded time limits

- Queue Depth: Backlog of pending requests

For a complete list, refer to Baseten's metrics documentation.
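Because the exact series depend on your deployments, it can be quicker to list what your endpoint actually returns. The function below is a minimal sketch that reads Prometheus text exposition format on standard input and prints each metric name once. It assumes you first save the endpoint's response to a file, for example by redirecting the curl check from the Troubleshooting section.

```bash
# Reads Prometheus text exposition format on stdin and prints unique metric names.
# Comment lines (# HELP, # TYPE) and blank lines are skipped; the name is
# everything before the first '{' (start of labels) or whitespace (before the value).
list_metric_names() {
  grep -v -e '^[[:space:]]*#' -e '^[[:space:]]*$' \
    | sed 's/[{[:space:]].*$//' \
    | sort -u
}

# Usage (hypothetical file name):
#   list_metric_names < baseten-metrics.txt
```

Histograms appear as separate `_bucket`, `_sum`, and `_count` series, so expect those suffixes in the output.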

Querying Metrics with SQL

One of Parseable's key features is the ability to query metrics using SQL. Here are some useful queries:

Average Inference Latency Over Time

```sql
-- The stream name contains a hyphen, so it must be double-quoted
SELECT
    DATE_TRUNC('minute', p_timestamp) AS time_bucket,
    AVG(model_inference_latency_ms) AS avg_latency_ms
FROM "baseten-metrics"
WHERE p_timestamp > NOW() - INTERVAL '1 hour'
GROUP BY time_bucket
ORDER BY time_bucket DESC;
```

Error Rate Analysis

```sql
SELECT
    DATE_TRUNC('hour', p_timestamp) AS hour_bucket,
    SUM(CASE WHEN status_code >= 400 THEN 1 ELSE 0 END) * 100.0 / COUNT(*) AS error_rate_percent
FROM "baseten-metrics"
WHERE p_timestamp > NOW() - INTERVAL '24 hours'
GROUP BY hour_bucket
ORDER BY hour_bucket DESC;
```

Peak Usage Identification

```sql
SELECT
    DATE_TRUNC('hour', p_timestamp) AS hour_bucket,
    MAX(request_count) AS peak_requests,
    MAX(gpu_utilization_percent) AS peak_gpu_usage
FROM "baseten-metrics"
WHERE p_timestamp > NOW() - INTERVAL '7 days'
GROUP BY hour_bucket
ORDER BY peak_requests DESC
LIMIT 10;
```

Setting Up Alerts

You can configure alerts in Parseable to notify you of critical issues:

High Error Rate Alert

```sql
SELECT COUNT(*) AS error_count
FROM "baseten-metrics"
WHERE p_timestamp > NOW() - INTERVAL '5 minutes'
  AND status_code >= 400
HAVING COUNT(*) > 10;
```

High Latency Alert

```sql
SELECT AVG(model_inference_latency_ms) AS avg_latency
FROM "baseten-metrics"
WHERE p_timestamp > NOW() - INTERVAL '5 minutes'
HAVING AVG(model_inference_latency_ms) > 1000;
```

Cost Optimization Tips

Monitoring helps you optimize costs:

- Identify Low-Traffic Periods: Scale down during off-peak hours

- Detect Inefficient Models: Find models with high latency or resource usage

- Optimize Batch Sizes: Analyze throughput vs. latency trade-offs

- Right-Size Instances: Match instance types to actual resource needs
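One caveat before estimating cost from request_count: Prometheus counters are cumulative, so if the field holds a running total, summing every scraped sample counts the same requests many times. The usual approach is to add up the differences between consecutive samples and treat any drop as a counter reset, the same idea as PromQL's `increase()` without its extrapolation. The awk-based helper below is a hypothetical sketch of that calculation for samples exported in time order.

```bash
# Reads one counter sample per line (oldest first) on stdin and prints the total increase.
# A drop in value is treated as a counter reset, so the new value itself counts as increase.
counter_increase() {
  awk '
    NF == 0 { next }
    !seen   { prev = $1 + 0; seen = 1; next }
    {
      cur = $1 + 0
      if (cur >= prev) total += cur - prev
      else             total += cur
      prev = cur
    }
    END { print total + 0 }
  '
}

# Usage:
#   printf '%s\n' 100 140 190 20 60 | counter_increase   # prints 150
```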

Example: Daily Cost Analysis Query

```sql
SELECT
    DATE_TRUNC('day', p_timestamp) AS day_bucket,
    SUM(request_count) AS total_requests,
    AVG(gpu_utilization_percent) AS avg_gpu_usage,
    -- 0.001 is a placeholder per-request price; replace it with your Baseten pricing.
    -- Summing assumes request_count holds per-scrape counts, not a cumulative counter.
    SUM(request_count) * 0.001 AS estimated_cost_usd
FROM "baseten-metrics"
WHERE p_timestamp > NOW() - INTERVAL '30 days'
GROUP BY day_bucket
ORDER BY day_bucket DESC;
```

Troubleshooting

Parseable Connection Issues

Symptom: Fluent Bit cannot connect to Parseable

Solutions:

- Verify Parseable is running: curl -u admin:admin http://localhost:8000/api/v1/about

- Check credentials match your Parseable configuration

- Ensure no firewall is blocking port 8000

- Review Fluent Bit logs for specific error messages

No Metrics Appearing

Symptom: Stream exists but no data is flowing

Solutions:

- Verify Baseten API key is valid and has metrics access

- Check Fluent Bit logs for authentication errors

- Ensure your Baseten models are deployed and receiving traffic

- Confirm the metrics endpoint is accessible: curl -H "Authorization: Api-Key $BASETEN_API_KEY" https://app.baseten.co/metrics
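When these checks are inconclusive, the HTTP status code alone usually narrows the cause. The helper below is a hypothetical sketch that maps the code curl reports to the likely problem. The usage comment assumes Baseten's Api-Key authorization header, and 000 is what curl's `%{http_code}` prints when no HTTP response was received at all.

```bash
# Maps an HTTP status code from the metrics endpoint to a likely cause.
# Succeeds only for 200.
explain_metrics_status() {
  case "$1" in
    200)     echo "ok: metrics endpoint reachable" ;;
    401|403) echo "auth failed: check that BASETEN_API_KEY is valid and can read metrics" ;;
    429)     echo "rate limited: lengthen Scrape_Interval or stop duplicate scrapers" ;;
    000)     echo "no response: DNS, network, or TLS problem" ;;
    *)       echo "unexpected status $1: check the Fluent Bit logs" ;;
  esac
  [ "$1" = 200 ]
}

# Usage:
#   code=$(curl -s -o /dev/null -w '%{http_code}' \
#     -H "Authorization: Api-Key $BASETEN_API_KEY" https://app.baseten.co/metrics)
#   explain_metrics_status "$code"
```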

Rate Limit Errors (HTTP 429)

Symptom: Baseten returns 429 Too Many Requests

Solutions:

- Keep scrape interval at 60 seconds or higher (Baseten allows 6 requests/minute)

- Check if multiple scrapers are running simultaneously

- Review Baseten's rate limit documentation for your plan tier

High Memory Usage

Symptom: Fluent Bit consuming excessive memory

Solutions:

- Cap the input's in-memory buffer with Mem_Buf_Limit in the [INPUT] section

- Lower the Flush interval in [SERVICE] so buffered records are sent sooner instead of accumulating

- Consider filtering out unnecessary metrics

Advanced Configuration

Filtering Specific Metrics

To reduce data volume, filter only the metrics you need:

```
[FILTER]
    Name   grep
    Match  *
    Regex  __name__ (model_inference_latency_ms|request_count|error_rate)
```

Adding Custom Labels

Enrich metrics with additional context:

```
[FILTER]
    Name   modify
    Match  *
    Add    environment production
    Add    region us-west-2
```

Multiple Baseten Deployments

Monitor multiple Baseten accounts by creating separate input sections:

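```
[INPUT]
    Name             prometheus_scrape
    Alias            baseten_production
    Host             app.baseten.co
    Port             443
    Metrics_Path     /metrics
    Scrape_Interval  60
    HTTP_User        ${BASETEN_PROD_API_KEY}
    tls              On
    tls.verify       On
    Tag              baseten.production

[INPUT]
    Name             prometheus_scrape
    Alias            baseten_staging
    Host             app.baseten.co
    Port             443
    Metrics_Path     /metrics
    Scrape_Interval  60
    HTTP_User        ${BASETEN_STAGING_API_KEY}
    tls              On
    tls.verify       On
    Tag              baseten.staging
```

Conclusion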

You now have a complete observability solution for your Baseten AI deployments. This setup provides:

- Real-time visibility into model performance and resource usage

- Cost insights to optimize your infrastructure spending

- Reliability monitoring to catch issues before they impact users

- Flexible querying with SQL for custom analysis

The combination of Baseten's metrics API, Fluent Bit's lightweight collection, and Parseable's efficient storage creates a powerful, cost-effective monitoring stack that scales with your AI workloads.

For questions or feedback, join the Parseable community or check out the documentation.