Introduction

Your observability data crosses borders every millisecond. In 2025, that's a compliance nightmare waiting to happen.

As financial regulators enforce DORA and the EU Data Act takes effect, "location-blind" telemetry isn't just risky; it's potentially illegal. Yet most teams still treat data residency as an afterthought, discovering too late that their logs are replicated globally, their metrics cross oceans, and their traces violate sovereignty laws.

The Stakes Are Higher Than Ever

Three critical questions every engineering leader must answer:

- Where does your observability data actually live?

- Who can access it and from where?

- Can you prove compliance when auditors come knocking?

If you hesitated on any of these, you're not alone. Most observability stacks make data residency complex, expensive, and fragile.

There's a Better Way

You don't need to rebuild your entire observability infrastructure. You need a sovereign-by-design approach that makes compliance automatic, not accidental.

This guide shows you exactly how to:

- Build regional data planes that keep data where it belongs

- Configure OpenTelemetry for geographic routing

- Maintain encryption keys within jurisdictional boundaries

- Deploy a solution in minutes, not months

The Regulatory Tsunami: Why 2025 Changes Everything

Two seismic regulatory shifts have transformed data residency from a "nice-to-have" into an existential requirement:

1. DORA: Your Observability Is Now a Compliance Story

Effective January 17, 2025, the EU's Digital Operational Resilience Act fundamentally changes how financial services handle telemetry data. This isn't just about storing logs. It's about proving you can monitor, respond to, and recover from incidents while keeping data within jurisdictional boundaries.

What this means for you:

- Every log, metric, and trace becomes evidence in compliance audits

- ICT providers (yes, that includes your SaaS vendors) must demonstrate regional data controls

- Failure to comply risks regulatory action and reputational damage

2. The EU Data Act: Portability Is No Longer Optional

Applicable from September 12, 2025, this legislation mandates data portability and targets vendor lock-in. Major cloud providers have already begun waiving data transfer (egress) fees for customers who switch providers.

The immediate impact:

- Your observability architecture must support seamless data migration

- Vendor switching must be technically and economically feasible

- Archive strategies need complete rethinking

The Global Domino Effect

While Europe leads the charge, the rest of the world isn't standing still:

United States: Executive Order 14117 restricts bulk transfers of sensitive personal data to designated countries of concern. If your observability vendor routes data through those countries, you may be non-compliant without knowing it.

India: The DPDP framework allows transfers except to blacklisted countries, but that list can change overnight. Build kill-switches now, not during an emergency.

China: Recent relaxations in data export rules create opportunities, but only if you can prove data never touched restricted systems.

The pattern is clear: every major economy is tightening data sovereignty rules. The question isn't whether you'll need regional data controls. It's whether you'll have them ready in time.

Residency vs. Sovereignty

Here's the expensive mistake teams make: they solve for data residency but completely miss data sovereignty. Understanding the difference can save you from compliance failures and emergency migrations.

Data Residency: The "Where"

Data residency simply means where your data physically lives. Store logs in an EU data center? Congratulations, you have EU data residency. But that's only half the equation.

Data Sovereignty: The "Who" and "How"

Data sovereignty determines who can access your data and under which legal framework. This is where most observability stacks fail spectacularly.

Consider this scenario: Your logs sit in EU-WEST-1, but:

- Encryption keys are managed from a US control plane

- Global support teams can access production data

- Disaster recovery automatically replicates to US-EAST-1

- "Anonymous" analytics aggregate data globally

You have residency. You don't have sovereignty.

The Hidden Sovereignty Violations

| Surface-Level Compliance | Hidden Violations |
|---|---|
| ✅ EU data centers | ❌ Keys managed globally |
| ✅ Regional endpoints | ❌ Support access from anywhere |
| ✅ Local processing | ❌ Silent cross-border replication |
| ✅ Compliant storage | ❌ Analytics that leak metadata |

In 2025, auditors won't just ask where your data lives. They'll demand proof of who can access it, how it's encrypted, and where every copy exists. Can you provide that proof today?

The Four Ways Retroactive Residency Fails

Trying to add data residency after deployment is like adding foundations after building the house. Here are the four catastrophic failures we see repeatedly:

1. The Silent Replication Trap

Your SaaS provider promises EU hosting, but their high-availability setup automatically mirrors data to US regions. You discover this during an audit six months later.

2. The Support Backdoor

Your data stays regional until something breaks. Then a well-meaning support engineer pulls production logs into a debugging environment halfway around the world. Compliance violation in 30 seconds.

3. The Backup Betrayal

Primary data respects boundaries, but automated backups default to the vendor's cheapest storage region. Your carefully segregated EU data now lives in US-EAST-1's cold storage.

4. The Analytics Leak

"Anonymous" telemetry seems harmless until you realize it includes IP addresses, user agents, and session tokens. That aggregation pipeline? It runs in a single global region.

The harsh truth: These aren't edge cases. They're the default behavior of most observability platforms. Without deliberate design, data residency is an illusion maintained only until someone looks closely.

The Blueprint: Building Sovereign-by-Design Observability

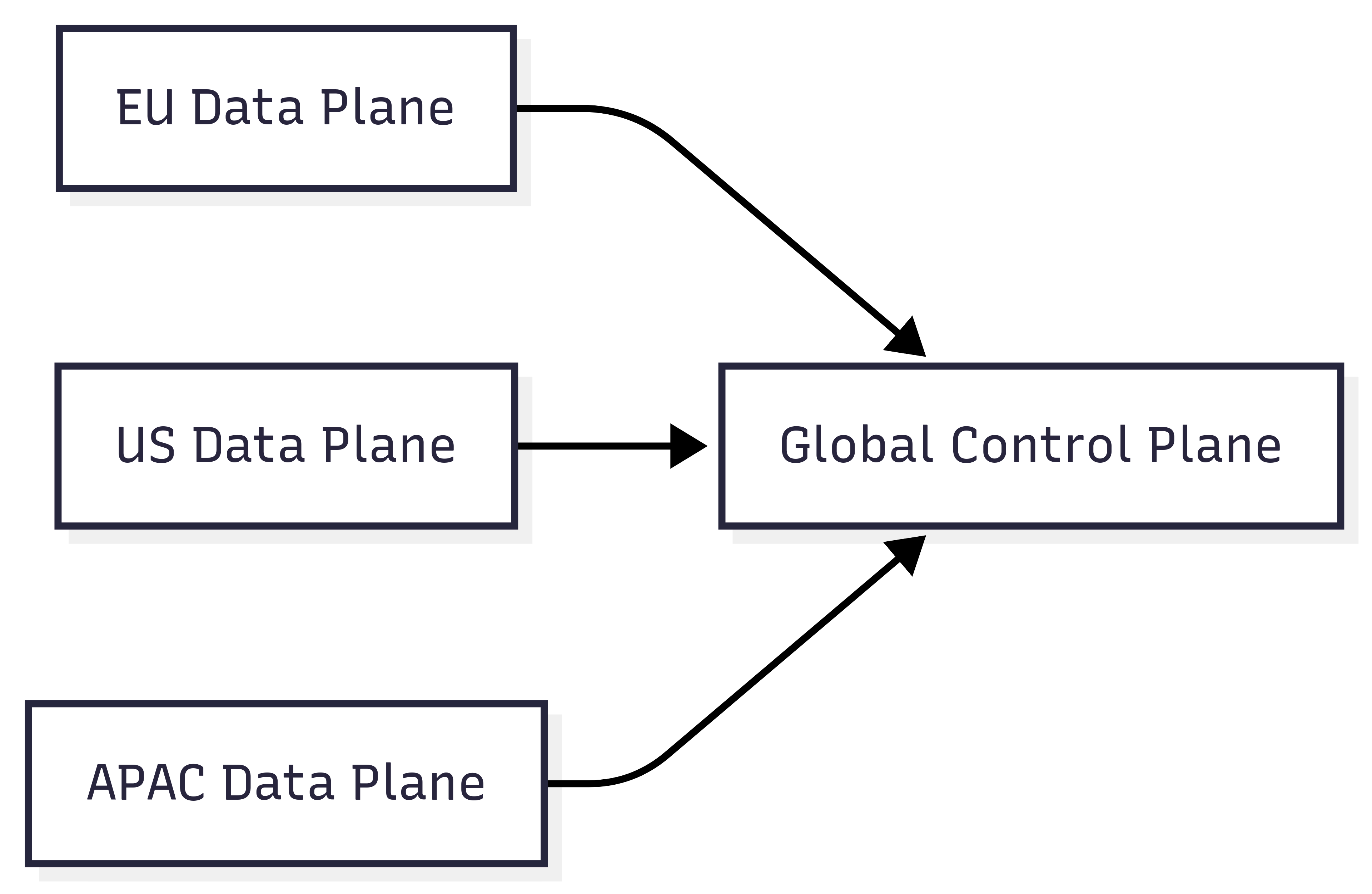

Pattern A: Regional Data Planes with Policy-Aware Federation

The gold standard for data sovereignty: completely isolated regional data planes that never share raw data across borders.

How it works:

- Each region operates independently with its own storage and processing

- The global control plane only handles metadata and aggregated metrics

- Cross-region queries return summaries, never raw telemetry

- Regional failures don't cascade globally

This pattern requires more infrastructure but provides absolute sovereignty guarantees.

Pattern B: Edge-Based Routing with OpenTelemetry

Make residency decisions at the collection point, before data ever leaves its origin region.

Geographic Routing:

Configure your OpenTelemetry collectors to automatically route data based on geographic tags. When telemetry arrives with a tenant region attribute starting with "eu-", it gets sent exclusively to EU exporters. Similarly, data tagged with "us-" prefixes routes only to US infrastructure. This ensures data never accidentally crosses regional boundaries due to misconfiguration or default routing behavior.
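The routing described above could be sketched with the routing connector from the OpenTelemetry Collector contrib distribution. This is a minimal sketch, not a drop-in config: the resource attribute name `tenant.region`, the endpoint hostnames, and the file name `otel-routing.yaml` are all placeholder assumptions.

```shell
# Write a Collector config that routes logs by region prefix.
# Assumed placeholders: tenant.region, the example.com endpoints, the file name.
cat > otel-routing.yaml <<'EOF'
receivers:
  otlp:
    protocols:
      http:

exporters:
  otlphttp/eu:
    endpoint: https://parseable.eu.example.com
  otlphttp/us:
    endpoint: https://parseable.us.example.com

connectors:
  routing:
    # Each statement is evaluated against resource attributes.
    table:
      - statement: route() where IsMatch(attributes["tenant.region"], "^eu-")
        pipelines: [logs/eu]
      - statement: route() where IsMatch(attributes["tenant.region"], "^us-")
        pipelines: [logs/us]

service:
  pipelines:
    logs/in:
      receivers: [otlp]
      exporters: [routing]
    logs/eu:
      receivers: [routing]
      exporters: [otlphttp/eu]
    logs/us:
      receivers: [routing]
      exporters: [otlphttp/us]
EOF
```

No `default_pipelines` entry is set here on purpose: decide explicitly what should happen to telemetry that carries no region tag rather than letting it fall through to an arbitrary region. If you have the contrib Collector installed, `otelcol-contrib validate --config=otel-routing.yaml` is one way to check the file before rolling it out.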

PII Redaction at Source:

Before any data leaves the collection point, strip out personally identifiable information directly in the OpenTelemetry processor. Remove sensitive fields like email addresses and social security numbers at the source, ensuring this data never enters your observability pipeline. This approach is far safer than trying to redact data after it's been stored: you can't leak what you never collected.
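As an illustration, the Collector's `attributes` processor can delete named attributes before export. The attribute keys `user.email` and `user.ssn` and the file name are assumptions for this sketch; substitute whatever keys your services actually emit.

```shell
# Write a processor fragment that drops two PII attributes at the collector.
# Assumed placeholders: the attribute keys and the file name.
cat > otel-redaction.yaml <<'EOF'
processors:
  attributes/redact-pii:
    actions:
      - key: user.email
        action: delete
      - key: user.ssn
        action: delete
EOF
```

The processor only takes effect once `attributes/redact-pii` is listed under `processors` in each pipeline. It removes whole attributes by key and does not scan free-text log bodies, so PII embedded in message strings needs pattern-based handling (for example with the contrib `transform` or `redaction` processors).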

This pattern ensures sensitive data never leaves its jurisdiction, even temporarily.

Pattern C: Encryption Key Sovereignty

True data sovereignty requires controlling not just where data lives, but who can decrypt it.

The non-negotiables:

- Customer-managed keys (CMEK) in regional key management services

- Hold Your Own Key (HYOK) for maximum control

- Vendor operators must have zero decryption capability

- Key material never crosses jurisdictional boundaries

- Audit logs for every key access

Without key sovereignty, data residency is meaningless: encrypted data in the EU is still compromised if keys are managed from Silicon Valley.
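On AWS, one way to approximate the customer-managed-key requirement is to set the bucket's default encryption to a KMS key created in the same region. The sketch below assumes a placeholder key ARN, account ID, bucket name, and file name; it covers only default encryption, not key policies or HYOK.

```shell
# Default-encryption config pointing at a customer-managed KMS key in eu-west-1.
# The ARN below is a placeholder, not a real key.
cat > bucket-encryption.json <<'EOF'
{
  "Rules": [
    {
      "ApplyServerSideEncryptionByDefault": {
        "SSEAlgorithm": "aws:kms",
        "KMSMasterKeyID": "arn:aws:kms:eu-west-1:111122223333:key/EXAMPLE-KEY-ID"
      },
      "BucketKeyEnabled": true
    }
  ]
}
EOF

# Apply the config to a bucket. $1 = bucket name.
apply_regional_encryption() {
  aws s3api put-bucket-encryption \
    --bucket "$1" \
    --server-side-encryption-configuration file://bucket-encryption.json
}
```

Calling `apply_regional_encryption your-sovereign-logs` would apply it. Who may use the key is governed separately by the KMS key policy, which is where vendor or cross-region access would need to be denied.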

Pattern D: Tiered Retention with Regional Boundaries

Structure your data retention to respect jurisdictional boundaries at every tier. Keep your hot data (last 7 days) in regional S3 for immediate access. Move warm data (8-30 days) to S3 Infrequent Access within the same region for cost optimization. Archive cold data (older than 30 days) to S3 Glacier in the same region, so long-term storage never crosses borders.
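As a rough sketch, the cold tier could be expressed as an S3 lifecycle rule. Lifecycle transitions change an object's storage class inside the same bucket, so the data stays in the bucket's region. The rule ID, file name, and bucket name are placeholders. The warm tier is not shown: S3 lifecycle rules only accept a transition to Standard-IA once objects are at least 30 days old, so check how your tier boundaries map onto what S3 allows.

```shell
# Lifecycle rule: move every object to Glacier after 30 days (same bucket, same region).
cat > lifecycle.json <<'EOF'
{
  "Rules": [
    {
      "ID": "archive-cold-telemetry",
      "Status": "Enabled",
      "Filter": { "Prefix": "" },
      "Transitions": [
        { "Days": 30, "StorageClass": "GLACIER" }
      ]
    }
  ]
}
EOF

# Apply the rule to a bucket. $1 = bucket name.
apply_retention_tiers() {
  aws s3api put-bucket-lifecycle-configuration \
    --bucket "$1" \
    --lifecycle-configuration file://lifecycle.json
}
```

Usage would be `apply_retention_tiers your-sovereign-logs`. Objects in Glacier are not immediately readable, so confirm your query path tolerates archived data before enabling a rule like this.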

For disaster recovery, maintain strict regional boundaries. If your primary site is in EU-West-1, your secondary must stay within the EU (like EU-Central-1). Never allow automatic failover to cross regulatory boundaries: no transatlantic replication to US-East-1, regardless of cost savings or latency benefits. The compliance risk far outweighs any operational advantages.

Why Parseable Makes Data Residency Simple

While traditional observability stacks require orchestrating dozens of components across regions, Parseable's single-binary architecture transforms data residency from a complex engineering project into a five-minute deployment.

The Power of One: Single Binary, Total Control

Imagine deploying complete observability infrastructure in any region with just one command:

    # Complete regional deployment in under 5 minutes
    docker run -p 8000:8000 \
      -v /data/eu-west-1:/parseable/data \
      -e P_S3_REGION=eu-west-1 \
      parseable/parseable:latest

Direct-to-Storage Architecture: Your Data, Your Rules

Parseable writes directly to your S3-compatible storage, eliminating the complex data routing that plagues traditional stacks:

    # Complete sovereignty with regional S3
    P_S3_URL: https://s3.eu-west-1.amazonaws.com
    P_S3_REGION: eu-west-1
    P_S3_BUCKET: your-sovereign-logs

What this means:

- Data never transits through vendor infrastructure

- You control encryption keys completely

- Works with any S3-compatible storage (AWS, MinIO, Ceph)

- Zero vendor-managed replication or caching

The Simplicity Advantage

Traditional observability stacks need:

- Metadata services (often globally distributed)

- Separate ingestion, processing, and query layers

- Complex service meshes and load balancers

- Centralized control planes that touch your data

- Support infrastructure with production access

Parseable needs:

- The binary

- Your S3 bucket

- Nothing else

This radical simplicity isn't just elegant. It's what makes true data sovereignty achievable for teams of any size.

How Parseable Compares: A Reality Check

| Platform | Architecture | Data Residency Reality | Parseable's Approach |
|---|---|---|---|
| Elastic Cloud | Multi-component cluster | Multiple nodes to coordinate; cross-cluster search complexity; snapshot shipping crosses borders | Single binary = single deployment; no coordination overhead; direct regional S3 writes |
| Datadog | SaaS-only | Your data leaves your infrastructure; limited regional presence; vendor controls all replication | Self-host anywhere you need; your infrastructure, your control; no vendor replication |
| Splunk | Heavy forwarders + indexers | Complex forwarding rules; license servers phone home; bucket replication maze | No forwarding layer needed; no license server callbacks; simple S3 lifecycle policies |
| New Relic | Agent-based SaaS | Agents send data externally; limited EU presence; vendor-managed everything | OpenTelemetry-native; deploy in any region; your storage, your control |
| Grafana Stack | Loki + Tempo + Mimir + Grafana | 4+ components to configure; each needs residency setup; complex federation required | One binary, one configuration; unified setup; no federation complexity |

Real-World Deployment Patterns That Actually Work

Pattern 1: Complete Regional Isolation

Deploy completely independent Parseable instances in each region with zero cross-talk. Your EU deployment runs in EU-West-1 with its own S3 bucket for EU telemetry. Your US deployment operates separately in US-East-1 with dedicated US storage. APAC runs independently in AP-Southeast-1. Each instance is a sovereign island: no shared infrastructure, no hidden dependencies, no accidental data leakage. This pattern gives the strongest isolation but requires managing multiple deployments.

Pattern 2: Smart Edge Routing

Use OpenTelemetry Collectors at your edge to intelligently route data before it leaves its origin. Configure collectors to examine region attributes on incoming telemetry and automatically direct EU-tagged data to EU Parseable endpoints while US-tagged data goes to US infrastructure. This approach prevents data from ever crossing jurisdictional boundaries accidentally. The routing happens at collection time, not after storage, ensuring compliance from the first millisecond.

Pattern 3: Hybrid Cloud-On-Premises

For organizations with strict data locality requirements, run Parseable on-premises for sensitive data while using cloud deployments for less sensitive telemetry. Your on-premises instance uses local storage volumes, keeping critical data within your physical control. This pattern works perfectly for financial services that need certain data to never leave their data centers while still leveraging cloud economics for general observability.

Security and Compliance: Built-In, Not Bolted-On

Granular Access Control

Implement role-based access control that respects regional boundaries. Create separate roles for EU analysts who can only access EU logs and metrics, while US analysts are restricted to US data streams. Each role gets precisely defined permissions—read-only access for analysts, write access for regional admins. This ensures that even with valid credentials, users can't accidentally or intentionally access data from other jurisdictions.

Audit Trail

Maintain comprehensive audit logs that track every data access attempt. Know exactly who queried what data, from which IP address, and when. This isn't just about compliance—it's about proving compliance. When auditors ask "Can you show us who accessed EU production logs last week?", you have immediate, queryable answers. Filter by stream, time range, user, or any combination to demonstrate complete visibility into data access patterns.

The Economics of Simplicity

| Aspect | Traditional Stack | Parseable |
|---|---|---|
| Infrastructure | 10+ containers/VMs per region | 1 container per region |
| Operational Overhead | Multiple teams for different components | Single binary to manage |
| Network Costs | Inter-service communication | Direct S3 writes |
| Licensing | Per-node/per-core pricing | Simple, transparent pricing |
| Compliance Audits | Complex component mapping | Single deployment to audit |

Deploy Your First Regional Instance in 4 Steps

Step 1: Choose Your Region

Start by identifying which AWS region aligns with your compliance requirements, and select one that keeps your data within the required jurisdiction. For EU compliance, choose EU-West-1 (Ireland) or EU-Central-1 (Frankfurt). An S3 bucket's region cannot be changed after creation, so choose carefully.

Step 2: Deploy Parseable

Create a regional S3 bucket specifically for your telemetry data in your chosen region. Then deploy Parseable using Helm, configuring it to use your regional bucket. The deployment automatically respects the bucket's region, ensuring data never leaves that jurisdiction. Disable local persistence since you're using S3 as your storage backend.
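The bucket-creation half of this step might look like the following with the AWS CLI. The function name and bucket names are placeholders, and the Helm half is omitted because the chart values depend on your Parseable version.

```shell
# Create an S3 bucket pinned to one region. $1 = bucket name, $2 = region.
create_regional_bucket() {
  bucket_name=$1
  bucket_region=$2
  if [ "$bucket_region" = "us-east-1" ]; then
    # us-east-1 is the one region that rejects an explicit LocationConstraint.
    aws s3api create-bucket --bucket "$bucket_name" --region "$bucket_region"
  else
    aws s3api create-bucket --bucket "$bucket_name" --region "$bucket_region" \
      --create-bucket-configuration "LocationConstraint=$bucket_region"
  fi
}
```

For example, `create_regional_bucket your-sovereign-logs eu-west-1` would create the bucket in Ireland.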

Step 3: Configure OpenTelemetry

Point your OpenTelemetry exporters to your regional Parseable endpoint. Add region headers to all telemetry to ensure proper routing and compliance tracking. This configuration ensures that data from specific regions only flows to the appropriate Parseable instance, maintaining strict geographic boundaries.
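For services instrumented with an OpenTelemetry SDK, the standard environment variables are one low-effort way to do this. The endpoint hostname, the header name `x-data-region`, and the attribute names are illustrative assumptions; any authentication or stream headers your Parseable instance expects would be added to the same header list.

```shell
# Standard OpenTelemetry SDK environment variables; the values are placeholders.
export OTEL_EXPORTER_OTLP_ENDPOINT="https://parseable.eu.example.com"
# Comma-separated key=value pairs sent as headers on every export request.
export OTEL_EXPORTER_OTLP_HEADERS="x-data-region=eu-west-1"
# Resource attributes attached to all telemetry from this process.
export OTEL_RESOURCE_ATTRIBUTES="tenant.region=eu-west-1,data_residency=eu"
```

Setting these per deployment region means the application code stays identical everywhere and only the environment decides where telemetry goes.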

Step 4: Verify Data Residency

After deployment, verify your data residency configuration. Check that your S3 bucket is in the correct region and confirm no cross-region replication rules exist. This verification step is crucial—many compliance failures occur because teams assume their configuration is correct without actually verifying it. Document these verification results for your compliance records.
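A small helper along these lines could automate both checks with the AWS CLI. It is a sketch under assumptions: the function name is made up, and any error from `get-bucket-replication` (including a permissions error) is treated as "no replication configured", so run it with credentials that can read the bucket configuration.

```shell
# Check a bucket's region and that no replication rules exist.
# $1 = bucket name, $2 = expected region. Returns 0 only if both checks pass.
verify_residency() {
  check_bucket=$1
  expected_region=$2
  actual_region=$(aws s3api get-bucket-location --bucket "$check_bucket" \
    --query LocationConstraint --output text) || return 2
  # Note: buckets in us-east-1 report "None" here rather than a region name.
  if [ "$actual_region" != "$expected_region" ]; then
    echo "FAIL: $check_bucket is in $actual_region, expected $expected_region"
    return 1
  fi
  # get-bucket-replication exits non-zero when no replication config exists.
  if aws s3api get-bucket-replication --bucket "$check_bucket" >/dev/null 2>&1; then
    echo "FAIL: $check_bucket has a replication configuration"
    return 1
  fi
  echo "OK: $check_bucket is in $expected_region with no replication rules"
}
```

Running `verify_residency your-sovereign-logs eu-west-1` and saving the output with a timestamp gives you a simple artifact for your compliance records.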

Making It Real: Implementation Best Practices

The Five Commandments of Data Sovereignty

- Regional ingestion endpoints: Route EU traffic to EU collectors, APAC to APAC. Never cross streams.

- Enrich before storing: Add `region` and `data_residency` tags at collection time, not during queries.

- Redact at the source: Strip PII in OpenTelemetry processors before data touches disk. Data that is never stored can't be leaked later.

- Sample with boundaries: High-value traces stay local. Only aggregates cross borders.

- Test your exits: Regularly export and re-import data. If you can't leave, you don't have sovereignty.

Follow these principles, and your incident responders get the visibility they need without compliance nightmares.
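To make the "enrich before storing" principle concrete, a regional Collector could stamp both tags with the `resource` processor. This is a sketch: the attribute values and file name are placeholders for one EU collector.

```shell
# Processor fragment for an EU collector: stamp region tags on all telemetry.
cat > otel-enrich.yaml <<'EOF'
processors:
  resource/residency:
    attributes:
      - key: region
        value: eu-west-1
        action: upsert
      - key: data_residency
        value: eu
        action: upsert
EOF
```

Because `upsert` overwrites any value the client sent, the tag reflects where the data was actually collected rather than what the sender claimed.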

Evaluating Observability Vendors: The Questions That Matter

When assessing any observability solution for data residency, ask these five critical questions:

- "Show me your data flow diagram." If they can't map exactly where data goes, walk away.

- "What happens during support escalation?" The answer reveals whether they truly understand sovereignty.

- "How do I export everything and leave?" Vague answers mean vendor lock-in.

- "Where are your encryption keys managed?" "In the cloud" isn't good enough.

- "What data crosses borders for 'analytics'?" Anonymous doesn't mean compliant.

Three Real-World Scenarios You Can Implement Today

Scenario 1: EU Financial Services (DORA Compliance)

Compliance Requirements mapped to implementation

- Compliance Need: DORA Chapter II (ICT risk management, Articles 5-16)

- Data Location: EU-only processing and storage

- Key Management: Regional KMS with audit trails

- Disaster Recovery: Intra-EU failover only

Parseable deployment

    docker run -p 8000:8000 \
      -e P_S3_REGION=eu-west-1 \
      -e P_S3_BUCKET=eu-fintech-logs \
      parseable/parseable:latest

Why this works: Single binary deployment ensures no hidden data paths. Your data never leaves the EU, period.

Scenario 2: India SaaS Platform (DPDP Compliance)

Compliance Requirements mapped to implementation

- Compliance Need: DPDP negative list compliance

- Kill Switch: Instant transfer termination

- Geography Labels: Mandatory on all telemetry

Parseable deployment

    docker run -p 8000:8000 \
      -e P_S3_REGION=ap-south-1 \
      parseable/parseable:latest

Why this works: Parseable's routing can be updated in real-time as regulations change. No redeployment needed.

Scenario 3: China Operations (PIPL Compliance)

Compliance Requirements mapped to implementation

- Compliance Need: PIPL Article 40 (local storage)

- Cross Border: Disabled by default

- Data Categories: Classified at ingestion

Parseable deployment

    docker run -p 8000:8000 \
      -e P_S3_URL=https://oss-cn-beijing.aliyuncs.com \
      -e P_S3_REGION=cn-north-1 \
      parseable/parseable:latest

Why this works: Complete isolation by default. Cross-border transfers require explicit configuration changes.

The Bottom Line: Sovereignty Through Simplicity

In 2025, data residency isn't optional; it's existential. Every day you delay implementing proper controls adds to your compliance risk.

The traditional approach requires months of planning, complex multi-region architectures, and constant vigilance against data leakage. It's expensive, fragile, and often fails when you need it most.

The Parseable Difference: Complexity Eliminated

Parseable's single-binary architecture transforms an impossible problem into a trivial deployment:

Instead of: Orchestrating dozens of services across regions, managing complex federation, and hoping nothing leaks across borders...

You get: One binary, one configuration, complete sovereignty.

| The Old Way | The Parseable Way | Time Saved |
|---|---|---|
| Multi-week architectural review | 5-minute Docker deployment | 3 weeks |
| Complex service mesh configuration | Single binary, zero mesh | 2 weeks |
| Cross-region federation setup | No federation needed | 1 week |
| Vendor compliance questionnaires | You control everything | Ongoing |
| Hidden replication discovery | Direct S3, no surprises | Priceless |

Conclusion: The Clock Is Ticking

Every major economy is implementing data sovereignty regulations. DORA is already in effect. The EU Data Act applies from September 12, 2025. Your competitors are already moving.

The question isn't whether you need regional data controls—it's whether you'll implement them proactively or during a compliance emergency.

The Choice Is Clear

Option 1: The Traditional Path

- Spend months architecting complex multi-region systems

- Deploy dozens of components per region

- Hope your vendor's "compliance features" actually work

- Discover violations during your next audit

- Scramble to fix them under regulatory pressure

Option 2: The Parseable Path

- Deploy complete observability in any region in 5 minutes

- One binary eliminates entire categories of compliance failures

- You control the data, the keys, and the infrastructure

- Pass audits with confidence

- Sleep soundly knowing you're compliant

Your Next Action

If you've read this far, you understand the stakes. Every day of delay increases your risk. Here's what to do right now:

- Audit your current stack: Where does your observability data actually live today?

- Identify violations: Which regulations are you already breaking?

- Deploy Parseable: Start with one region, prove the concept, expand globally.

The beauty of Parseable's approach? You can start small. Deploy in one region this afternoon. Prove compliance. Then expand. No massive migration. No multi-month projects. Just immediate, demonstrable progress.

The Future Belongs to the Sovereign

Organizations that control their observability data will:

- Win more enterprise deals (compliance as a competitive advantage)

- Cut operational costs by avoiding SaaS premiums

- Respond to new regulations in hours, not months

- Build trust with customers who care about data privacy

- Avoid the catastrophic fines that are coming

Start Your Data Sovereignty Journey

Deploy Parseable in 5 Minutes:

curl -fsSL https://logg.ing/install | bash

Every day without proper data sovereignty is a day closer to a compliance crisis. Start now.